Cookbook¶

This is a repository for short and sweet examples and links for useful pandas recipes. We encourage users to add to this documentation.

This is a great First Pull Request (to add interesting links and/or put short code inline for existing links)

Idioms¶

These are some neat pandas idioms

How to split a frame with a boolean criterion?

How to select from a frame with complex criteria?

Select rows closest to a user-defined number

How to reduce a sequence (e.g. of Series) using a binary operator

Selection¶

The indexing docs.

Indexing using both row labels and conditionals

Use loc for label-oriented slicing and iloc positional slicing

Mask a panel by using np.where and then reconstructing the panel with the new masked values

Using ~ to take the complement of a boolean array, see

MultiIndexing¶

The multindexing docs.

Missing Data¶

The missing data docs.

Fill forward a reversed timeseries

In [1]: df = pd.DataFrame(np.random.randn(6,1), index=pd.date_range('2013-08-01', periods=6, freq='B'), columns=list('A'))

In [2]: df.ix[3,'A'] = np.nan

In [3]: df

Out[3]:

A

2013-08-01 0.469112

2013-08-02 -0.282863

2013-08-05 -1.509059

2013-08-06 NaN

2013-08-07 1.212112

2013-08-08 -0.173215

In [4]: df.reindex(df.index[::-1]).ffill()

Out[4]:

A

2013-08-08 -0.173215

2013-08-07 1.212112

2013-08-06 1.212112

2013-08-05 -1.509059

2013-08-02 -0.282863

2013-08-01 0.469112

Replace¶

Grouping¶

The grouping docs.

Apply to different items in a group

Replacing values with groupby means

Sort by group with aggregation

Create multiple aggregated columns

Create a value counts column and reassign back to the DataFrame

Shift groups of the values in a column based on the index

In [5]: df = pd.DataFrame(

...: {u'line_race': [10L, 10L, 8L, 10L, 10L, 8L],

...: u'beyer': [99L, 102L, 103L, 103L, 88L, 100L]},

...: index=[u'Last Gunfighter', u'Last Gunfighter', u'Last Gunfighter',

...: u'Paynter', u'Paynter', u'Paynter']); df

...:

Out[5]:

beyer line_race

Last Gunfighter 99 10

Last Gunfighter 102 10

Last Gunfighter 103 8

Paynter 103 10

Paynter 88 10

Paynter 100 8

In [6]: df['beyer_shifted'] = df.groupby(level=0)['beyer'].shift(1)

In [7]: df

Out[7]:

beyer line_race beyer_shifted

Last Gunfighter 99 10 NaN

Last Gunfighter 102 10 99

Last Gunfighter 103 8 102

Paynter 103 10 NaN

Paynter 88 10 103

Paynter 100 8 88

Splitting¶

Timeseries¶

Constructing a datetime range that excludes weekends and includes only certain times

Turn a matrix with hours in columns and days in rows into a continuous row sequence in the form of a time series. How to rearrange a python pandas DataFrame?

Dealing with duplicates when reindexing a timeseries to a specified frequency

Calculate the first day of the month for each entry in a DatetimeIndex

In [8]: dates = pd.date_range('2000-01-01', periods=5)

In [9]: dates.to_period(freq='M').to_timestamp()

Out[9]:

<class 'pandas.tseries.index.DatetimeIndex'>

[2000-01-01, ..., 2000-01-01]

Length: 5, Freq: None, Timezone: None

Plotting¶

The Plotting docs.

Setting x-axis major and minor labels

Plotting multiple charts in an ipython notebook

Annotate a time-series plot #2

Generate Embedded plots in excel files using Pandas, Vincent and xlsxwriter

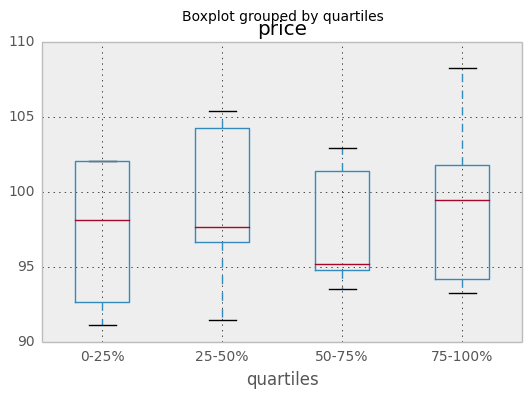

Boxplot for each quartile of a stratifying variable

In [10]: df = pd.DataFrame(

....: {u'stratifying_var': np.random.uniform(0, 100, 20),

....: u'price': np.random.normal(100, 5, 20)}

....: )

....:

In [11]: df[u'quartiles'] = pd.qcut(

....: df[u'stratifying_var'],

....: 4,

....: labels=[u'0-25%', u'25-50%', u'50-75%', u'75-100%']

....: )

....:

In [12]: df.boxplot(column=u'price', by=u'quartiles')

Out[12]: <matplotlib.axes.AxesSubplot at 0xaa40108c>

Data In/Out¶

Performance comparison of SQL vs HDF5

CSV¶

The CSV docs

Reading only certain rows of a csv chunk-by-chunk

Reading the first few lines of a frame

Reading a file that is compressed but not by gzip/bz2 (the native compressed formats which read_csv understands). This example shows a WinZipped file, but is a general application of opening the file within a context manager and using that handle to read. See here

Reading CSV with Unix timestamps and converting to local timezone

Write a multi-row index CSV without writing duplicates

Parsing date components in multi-columns is faster with a format

In [30]: i = pd.date_range('20000101',periods=10000)

In [31]: df = pd.DataFrame(dict(year = i.year, month = i.month, day = i.day))

In [32]: df.head()

Out[32]:

day month year

0 1 1 2000

1 2 1 2000

2 3 1 2000

3 4 1 2000

4 5 1 2000

In [33]: %timeit pd.to_datetime(df.year*10000+df.month*100+df.day,format='%Y%m%d')

100 loops, best of 3: 7.08 ms per loop

# simulate combinging into a string, then parsing

In [34]: ds = df.apply(lambda x: "%04d%02d%02d" % (x['year'],x['month'],x['day']),axis=1)

In [35]: ds.head()

Out[35]:

0 20000101

1 20000102

2 20000103

3 20000104

4 20000105

dtype: object

In [36]: %timeit pd.to_datetime(ds)

1 loops, best of 3: 488 ms per loop

Excel¶

The Excel docs

Reading from a filelike handle

Reading HTML tables from a server that cannot handle the default request header

HDFStore¶

The HDFStores docs

Simple Queries with a Timestamp Index

Managing heterogeneous data using a linked multiple table hierarchy

Merging on-disk tables with millions of rows

Deduplicating a large store by chunks, essentially a recursive reduction operation. Shows a function for taking in data from csv file and creating a store by chunks, with date parsing as well. See here

Creating a store chunk-by-chunk from a csv file

Appending to a store, while creating a unique index

Reading in a sequence of files, then providing a global unique index to a store while appending

Hierarchical queries on a HDFStore

Troubleshoot HDFStore exceptions

Setting min_itemsize with strings

Using ptrepack to create a completely-sorted-index on a store

Storing Attributes to a group node

In [13]: df = DataFrame(np.random.randn(8,3))

In [14]: store = HDFStore('test.h5')

In [15]: store.put('df',df)

# you can store an arbitrary python object via pickle

In [16]: store.get_storer('df').attrs.my_attribute = dict(A = 10)

In [17]: store.get_storer('df').attrs.my_attribute

Out[17]: {'A': 10}

Binary Files¶

pandas readily accepts numpy record arrays, if you need to read in a binary file consisting of an array of C structs. For example, given this C program in a file called main.c compiled with gcc main.c -std=gnu99 on a 64-bit machine,

#include <stdio.h>

#include <stdint.h>

typedef struct _Data

{

int32_t count;

double avg;

float scale;

} Data;

int main(int argc, const char *argv[])

{

size_t n = 10;

Data d[n];

for (int i = 0; i < n; ++i)

{

d[i].count = i;

d[i].avg = i + 1.0;

d[i].scale = (float) i + 2.0f;

}

FILE *file = fopen("binary.dat", "wb");

fwrite(&d, sizeof(Data), n, file);

fclose(file);

return 0;

}

the following Python code will read the binary file 'binary.dat' into a pandas DataFrame, where each element of the struct corresponds to a column in the frame:

import numpy as np

from pandas import DataFrame

names = 'count', 'avg', 'scale'

# note that the offsets are larger than the size of the type because of

# struct padding

offsets = 0, 8, 16

formats = 'i4', 'f8', 'f4'

dt = np.dtype({'names': names, 'offsets': offsets, 'formats': formats},

align=True)

df = DataFrame(np.fromfile('binary.dat', dt))

Note

The offsets of the structure elements may be different depending on the architecture of the machine on which the file was created. Using a raw binary file format like this for general data storage is not recommended, as it is not cross platform. We recommended either HDF5 or msgpack, both of which are supported by pandas’ IO facilities.

Aliasing Axis Names¶

To globally provide aliases for axis names, one can define these 2 functions:

In [18]: def set_axis_alias(cls, axis, alias):

....: if axis not in cls._AXIS_NUMBERS:

....: raise Exception("invalid axis [%s] for alias [%s]" % (axis, alias))

....: cls._AXIS_ALIASES[alias] = axis

....:

In [19]: def clear_axis_alias(cls, axis, alias):

....: if axis not in cls._AXIS_NUMBERS:

....: raise Exception("invalid axis [%s] for alias [%s]" % (axis, alias))

....: cls._AXIS_ALIASES.pop(alias,None)

....:

In [20]: set_axis_alias(DataFrame,'columns', 'myaxis2')

In [21]: df2 = DataFrame(randn(3,2),columns=['c1','c2'],index=['i1','i2','i3'])

In [22]: df2.sum(axis='myaxis2')

Out[22]:

i1 -1.335466

i2 -1.032281

i3 -0.488638

dtype: float64

In [23]: clear_axis_alias(DataFrame,'columns', 'myaxis2')

Creating Example Data¶

To create a dataframe from every combination of some given values, like R’s expand.grid() function, we can create a dict where the keys are column names and the values are lists of the data values:

In [24]: import itertools

In [25]: def expand_grid(data_dict):

....: rows = itertools.product(*data_dict.values())

....: return pd.DataFrame.from_records(rows, columns=data_dict.keys())

....:

In [26]: df = expand_grid(

....: {'height': [60, 70],

....: 'weight': [100, 140, 180],

....: 'sex': ['Male', 'Female']}

....: )

....:

In [27]: df

Out[27]:

sex weight height

0 Male 100 60

1 Male 100 70

2 Male 140 60

3 Male 140 70

4 Male 180 60

5 Male 180 70

6 Female 100 60

7 Female 100 70

8 Female 140 60

9 Female 140 70

10 Female 180 60

11 Female 180 70