Intro to Data Structures

We’ll start with a quick, non-comprehensive overview of the fundamental data structures in pandas to get you started. The fundamental behavior of data types, indexing, and axis labeling / alignment applies across all of the objects. To get started, import numpy and load pandas into your namespace:

In [1]: import numpy as np

# will use a lot in examples

In [2]: randn = np.random.randn

In [3]: from pandas import *

Here is a basic tenet to keep in mind: data alignment is intrinsic. The link between labels and data will not be broken unless done so explicitly by you.

We’ll give a brief intro to the data structures, then consider all of the broad categories of functionality and methods in separate sections.

When using pandas, we recommend the following import convention:

import pandas as pd

Series

Warning

In 0.13.0 Series has internally been refactored to no longer subclass ndarray but instead subclass NDFrame, similarly to the rest of the pandas containers. This should be a transparent change with only very limited API implications (see Internal Refactoring).

Series is a one-dimensional labeled array capable of holding any data type (integers, strings, floating point numbers, Python objects, etc.). The axis labels are collectively referred to as the index. The basic method to create a Series is to call:

>>> s = Series(data, index=index)

Here, data can be many different things:

- a Python dict

- an ndarray

- a scalar value (like 5)

The passed index is a list of axis labels. Thus, this separates into a few cases depending on what data is:

From ndarray

If data is an ndarray, index must be the same length as data. If no index is passed, one will be created having values [0, ..., len(data) - 1].

In [4]: s = Series(randn(5), index=['a', 'b', 'c', 'd', 'e'])

In [5]: s

Out[5]:

a -2.783

b 0.426

c -0.650

d 1.146

e -0.663

dtype: float64

In [6]: s.index

Out[6]: Index([u'a', u'b', u'c', u'd', u'e'], dtype='object')

In [7]: Series(randn(5))

Out[7]:

0 0.294

1 -0.405

2 1.167

3 0.842

4 0.540

dtype: float64
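As a minimal sketch of the two index rules above, the following uses the `pd` prefix from the recommended import convention rather than the star import. The variable names are illustrative only.

```python
import numpy as np
import pandas as pd

values = np.array([10.0, 20.0, 30.0])

# No index passed: labels default to 0 .. len(data) - 1
default_labels = pd.Series(values)
print(list(default_labels.index))

# An index whose length differs from the data is rejected
try:
    pd.Series(values, index=['a', 'b'])
    length_error = None
except ValueError as exc:
    length_error = exc
print(type(length_error).__name__)
```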

Note

Starting in v0.8.0, pandas supports non-unique index values. If an operation that does not support duplicate index values is attempted, an exception will be raised at that time. The reason for being lazy is nearly all performance-based (there are many instances in computations, like parts of GroupBy, where the index is not used).
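The lazy check can be illustrated with a small sketch. It assumes that `reindex` is one of the operations that requires unique labels; the names `dup` and `reindex_error` are illustrative.

```python
import pandas as pd

# Duplicate labels are accepted at construction time
dup = pd.Series([1.0, 2.0, 3.0], index=['a', 'a', 'b'])
print(dup.index.is_unique)

# Selecting a repeated label returns every matching entry
print(dup['a'])

# reindex needs unique labels, so the error only surfaces here
try:
    dup.reindex(['a', 'b', 'c'])
    reindex_error = None
except ValueError as exc:
    reindex_error = exc
print(type(reindex_error).__name__)
```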

From dict

If data is a dict and an index is passed, the values in data corresponding to the labels in the index will be pulled out. Otherwise, an index will be constructed from the sorted keys of the dict, if possible.

In [8]: d = {'a' : 0., 'b' : 1., 'c' : 2.}

In [9]: Series(d)

Out[9]:

a 0

b 1

c 2

dtype: float64

In [10]: Series(d, index=['b', 'c', 'd', 'a'])

Out[10]:

b 1

c 2

d NaN

a 0

dtype: float64

Note

NaN (not a number) is the standard missing data marker used in pandas.

From scalar value

If data is a scalar value, an index must be provided. The value will be repeated to match the length of index:

In [11]: Series(5., index=['a', 'b', 'c', 'd', 'e'])

Out[11]:

a 5

b 5

c 5

d 5

e 5

dtype: float64

Series is ndarray-like

Series acts very similarly to an ndarray, and is a valid argument to most NumPy functions. However, things like slicing also slice the index.

In [12]: s[0]

Out[12]: -2.7827595933769937

In [13]: s[:3]

Out[13]:

a -2.783

b 0.426

c -0.650

dtype: float64

In [14]: s[s > s.median()]

Out[14]:

b 0.426

d 1.146

dtype: float64

In [15]: s[[4, 3, 1]]

Out[15]:

e -0.663

d 1.146

b 0.426

dtype: float64

In [16]: np.exp(s)

Out[16]:

a 0.062

b 1.532

c 0.522

d 3.147

e 0.515

dtype: float64

We will address array-based indexing in a separate section.

Series is dict-like

A Series is like a fixed-size dict in that you can get and set values by index label:

In [17]: s['a']

Out[17]: -2.7827595933769937

In [18]: s['e'] = 12.

In [19]: s

Out[19]:

a -2.783

b 0.426

c -0.650

d 1.146

e 12.000

dtype: float64

In [20]: 'e' in s

Out[20]: True

In [21]: 'f' in s

Out[21]: False

If a label is not contained, an exception is raised:

>>> s['f']

KeyError: 'f'

Using the get method, a missing label will return None or specified default:

In [22]: s.get('f')

In [23]: s.get('f', np.nan)

Out[23]: nan

See also the section on attribute access.

Vectorized operations and label alignment with Series

When doing data analysis, as with raw NumPy arrays, looping through a Series value-by-value is usually not necessary. A Series can also be passed into most NumPy methods expecting an ndarray.

In [24]: s + s

Out[24]:

a -5.566

b 0.853

c -1.301

d 2.293

e 24.000

dtype: float64

In [25]: s * 2

Out[25]:

a -5.566

b 0.853

c -1.301

d 2.293

e 24.000

dtype: float64

In [26]: np.exp(s)

Out[26]:

a 0.062

b 1.532

c 0.522

d 3.147

e 162754.791

dtype: float64

A key difference between Series and ndarray is that operations between Series automatically align the data based on label. Thus, you can write computations without giving consideration to whether the Series involved have the same labels.

In [27]: s[1:] + s[:-1]

Out[27]:

a NaN

b 0.853

c -1.301

d 2.293

e NaN

dtype: float64

The result of an operation between unaligned Series will have the union of the indexes involved. If a label is not found in one Series or the other, the result will be marked as missing (NaN). Being able to write code without doing any explicit data alignment grants immense freedom and flexibility in interactive data analysis and research. The integrated data alignment features of the pandas data structures set pandas apart from the majority of related tools for working with labeled data.

Note

In general, we chose to make the default result of operations between differently indexed objects yield the union of the indexes in order to avoid loss of information. Having an index label, though the data is missing, is typically important information as part of a computation. You of course have the option of dropping labels with missing data via the dropna function.
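A short sketch of the union behavior and of dropping the resulting missing labels, using two Series with partly overlapping labels. The names `left`, `right`, and `total` are illustrative.

```python
import pandas as pd

left = pd.Series([1.0, 2.0, 3.0], index=['a', 'b', 'c'])
right = pd.Series([10.0, 20.0, 30.0], index=['b', 'c', 'd'])

# The result carries the union of both indexes; labels present on
# only one side ('a' and 'd') come back as NaN
total = left + right
print(total)

# dropna keeps only the labels that had data on both sides
print(total.dropna())
```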

Name attribute

Series can also have a name attribute:

In [28]: s = Series(np.random.randn(5), name='something')

In [29]: s

Out[29]:

0 0.541

1 -1.175

2 0.129

3 0.043

4 -0.429

Name: something, dtype: float64

In [30]: s.name

Out[30]: 'something'

The Series name will be assigned automatically in many cases, in particular when taking 1D slices of DataFrame as you will see below.
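As a small sketch of automatic naming, the example below takes one column slice and one row slice from a DataFrame. The frame and its labels are made up for illustration.

```python
import pandas as pd

frame = pd.DataFrame({'one': [1.0, 2.0], 'two': [3.0, 4.0]},
                     index=['a', 'b'])

# A column slice is named after the column label
column = frame['one']
print(column.name)

# A row slice is named after the row label
row = frame.loc['a']
print(row.name)
```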

DataFrame

DataFrame is a 2-dimensional labeled data structure with columns of potentially different types. You can think of it like a spreadsheet or SQL table, or a dict of Series objects. It is generally the most commonly used pandas object. Like Series, DataFrame accepts many different kinds of input:

- Dict of 1D ndarrays, lists, dicts, or Series

- 2-D numpy.ndarray

- Structured or record ndarray

- A Series

- Another DataFrame

Along with the data, you can optionally pass index (row labels) and columns (column labels) arguments. If you pass an index and / or columns, you are guaranteeing the index and / or columns of the resulting DataFrame. Thus, a dict of Series plus a specific index will discard all data not matching up to the passed index.

If axis labels are not passed, they will be constructed from the input data based on common sense rules.

From dict of Series or dicts

The result index will be the union of the indexes of the various Series. If there are any nested dicts, these will be first converted to Series. If no columns are passed, the columns will be the sorted list of dict keys.

In [31]: d = {'one' : Series([1., 2., 3.], index=['a', 'b', 'c']),

....: 'two' : Series([1., 2., 3., 4.], index=['a', 'b', 'c', 'd'])}

....:

In [32]: df = DataFrame(d)

In [33]: df

Out[33]:

one two

a 1 1

b 2 2

c 3 3

d NaN 4

In [34]: DataFrame(d, index=['d', 'b', 'a'])

Out[34]:

one two

d NaN 4

b 2 2

a 1 1

In [35]: DataFrame(d, index=['d', 'b', 'a'], columns=['two', 'three'])

Out[35]:

two three

d 4 NaN

b 2 NaN

a 1 NaN

Note

When a particular set of columns is passed along with a dict of data, the passed columns override the keys in the dict.

The row and column labels can be accessed through the index and columns attributes, respectively:

In [36]: df.index

Out[36]: Index([u'a', u'b', u'c', u'd'], dtype='object')

In [37]: df.columns

Out[37]: Index([u'one', u'two'], dtype='object')

From dict of ndarrays / lists

The ndarrays must all be the same length. If an index is passed, it must also be the same length as the arrays. If no index is passed, the index will be range(n), where n is the array length.

In [38]: d = {'one' : [1., 2., 3., 4.],

....: 'two' : [4., 3., 2., 1.]}

....:

In [39]: DataFrame(d)

Out[39]:

one two

0 1 4

1 2 3

2 3 2

3 4 1

In [40]: DataFrame(d, index=['a', 'b', 'c', 'd'])

Out[40]:

one two

a 1 4

b 2 3

c 3 2

d 4 1
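The length requirements can be checked with a brief sketch. It assumes only that pandas reports both mismatches as a `ValueError`; the exact message text varies between releases.

```python
import pandas as pd

# Columns of different lengths cannot be combined
try:
    pd.DataFrame({'one': [1.0, 2.0, 3.0], 'two': [4.0, 3.0]})
    column_error = None
except ValueError as exc:
    column_error = exc

# An index that is longer than the arrays is rejected as well
try:
    pd.DataFrame({'one': [1.0, 2.0]}, index=['a', 'b', 'c'])
    index_error = None
except ValueError as exc:
    index_error = exc

print(type(column_error).__name__, type(index_error).__name__)
```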

From structured or record array

This case is handled identically to a dict of arrays.

In [41]: data = np.zeros((2,),dtype=[('A', 'i4'),('B', 'f4'),('C', 'a10')])

In [42]: data[:] = [(1,2.,'Hello'),(2,3.,"World")]

In [43]: DataFrame(data)

Out[43]:

A B C

0 1 2 Hello

1 2 3 World

In [44]: DataFrame(data, index=['first', 'second'])

Out[44]:

A B C

first 1 2 Hello

second 2 3 World

In [45]: DataFrame(data, columns=['C', 'A', 'B'])

Out[45]:

C A B

0 Hello 1 2

1 World 2 3

Note

DataFrame is not intended to work exactly like a 2-dimensional NumPy ndarray.

From a list of dicts

In [46]: data2 = [{'a': 1, 'b': 2}, {'a': 5, 'b': 10, 'c': 20}]

In [47]: DataFrame(data2)

Out[47]:

a b c

0 1 2 NaN

1 5 10 20

In [48]: DataFrame(data2, index=['first', 'second'])

Out[48]:

a b c

first 1 2 NaN

second 5 10 20

In [49]: DataFrame(data2, columns=['a', 'b'])

Out[49]:

a b

0 1 2

1 5 10

From a dict of tuples

You can automatically create a multi-indexed frame by passing a dictionary whose keys are tuples:

In [50]: DataFrame({('a', 'b'): {('A', 'B'): 1, ('A', 'C'): 2},

....: ('a', 'a'): {('A', 'C'): 3, ('A', 'B'): 4},

....: ('a', 'c'): {('A', 'B'): 5, ('A', 'C'): 6},

....: ('b', 'a'): {('A', 'C'): 7, ('A', 'B'): 8},

....: ('b', 'b'): {('A', 'D'): 9, ('A', 'B'): 10}})

....:

Out[50]:

a b

a b c a b

A B 4 1 5 8 10

C 3 2 6 7 NaN

D NaN NaN NaN NaN 9

From a Series

The result will be a DataFrame with the same index as the input Series, and with one column whose name is the original name of the Series (only if no other column name provided).
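This section has no example of its own, so here is a minimal sketch. The Series name `ratings` and the replacement column name `scores` are illustrative; `to_frame` is used as one way to supply a different column name.

```python
import pandas as pd

named = pd.Series([1.0, 2.0, 3.0], index=['a', 'b', 'c'], name='ratings')

# The Series name becomes the single column label,
# and the index is carried over unchanged
frame = pd.DataFrame(named)
print(frame)

# to_frame accepts a different column name
renamed = named.to_frame(name='scores')
print(list(renamed.columns))
```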

Missing Data

Much more will be said on this topic in the Missing data section. To construct a DataFrame with missing data, use np.nan for those values which are missing. Alternatively, you may pass a numpy.ma.MaskedArray as the data argument to the DataFrame constructor, and its masked entries will be considered missing.
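Both routes can be sketched briefly. The example uses floating point data so that no dtype conversion is involved; the column labels are illustrative.

```python
import numpy as np
import pandas as pd

# np.nan marks the missing cell directly
with_nan = pd.DataFrame({'x': [1.0, np.nan, 3.0]})

# A masked array: the masked entry (row 0, column 1) becomes NaN
masked = np.ma.masked_array([[1.0, 2.0], [3.0, 4.0]],
                            mask=[[False, True], [False, False]])
from_masked = pd.DataFrame(masked, columns=['a', 'b'])
print(from_masked)
```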

Alternate Constructors

DataFrame.from_dict

DataFrame.from_dict takes a dict of dicts or a dict of array-like sequences and returns a DataFrame. It operates like the DataFrame constructor except for the orient parameter which is 'columns' by default, but which can be set to 'index' in order to use the dict keys as row labels.
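A minimal sketch of the two orient settings, using a dict of dicts with made-up labels:

```python
import pandas as pd

nested = {'row1': {'A': 1, 'B': 2},
          'row2': {'A': 3, 'B': 4}}

# Default orient='columns': outer keys become column labels
by_columns = pd.DataFrame.from_dict(nested)
print(by_columns)

# orient='index': outer keys become row labels
by_index = pd.DataFrame.from_dict(nested, orient='index')
print(by_index)
```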

DataFrame.from_records

DataFrame.from_records takes a list of tuples or an ndarray with structured dtype. It works analogously to the normal DataFrame constructor, except that index may be a specific field of the structured dtype to use as the index. For example:

In [51]: data

Out[51]:

array([(1, 2.0, 'Hello'), (2, 3.0, 'World')],

dtype=[('A', '<i4'), ('B', '<f4'), ('C', 'S10')])

In [52]: DataFrame.from_records(data, index='C')

Out[52]:

A B

C

Hello 1 2

World 2 3

DataFrame.from_items

DataFrame.from_items works analogously to the form of the dict constructor that takes a sequence of (key, value) pairs, where the keys are column (or row, in the case of orient='index') names, and the values are the column values (or row values). This can be useful for constructing a DataFrame with the columns in a particular order without having to pass an explicit list of columns:

In [53]: DataFrame.from_items([('A', [1, 2, 3]), ('B', [4, 5, 6])])

Out[53]:

A B

0 1 4

1 2 5

2 3 6

If you pass orient='index', the keys will be the row labels. But in this case you must also pass the desired column names:

In [54]: DataFrame.from_items([('A', [1, 2, 3]), ('B', [4, 5, 6])],

....: orient='index', columns=['one', 'two', 'three'])

....:

Out[54]:

one two three

A 1 2 3

B 4 5 6
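Later pandas releases deprecated from_items (in 0.23) and removed it (in 1.0). On such a release, the same two results can be approximated as sketched below. This assumes Python 3.7 or later, where a plain dict keeps insertion order, and a pandas version in which from_dict accepts a columns argument together with orient='index'.

```python
import pandas as pd

items = [('A', [1, 2, 3]), ('B', [4, 5, 6])]

# Column orientation: dict(items) keeps the order of the pairs
by_columns = pd.DataFrame(dict(items))
print(by_columns)

# Row orientation: keys become row labels, columns are named explicitly
by_index = pd.DataFrame.from_dict(dict(items), orient='index',
                                  columns=['one', 'two', 'three'])
print(by_index)
```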

Column selection, addition, deletion

You can treat a DataFrame semantically like a dict of like-indexed Series objects. Getting, setting, and deleting columns works with the same syntax as the analogous dict operations:

In [55]: df['one']

Out[55]:

a 1

b 2

c 3

d NaN

Name: one, dtype: float64

In [56]: df['three'] = df['one'] * df['two']

In [57]: df['flag'] = df['one'] > 2

In [58]: df

Out[58]:

one two three flag

a 1 1 1 False

b 2 2 4 False

c 3 3 9 True

d NaN 4 NaN False

Columns can be deleted or popped like with a dict:

In [59]: del df['two']

In [60]: three = df.pop('three')

In [61]: df

Out[61]:

one flag

a 1 False

b 2 False

c 3 True

d NaN False

When inserting a scalar value, it will naturally be propagated to fill the column:

In [62]: df['foo'] = 'bar'

In [63]: df

Out[63]:

one flag foo

a 1 False bar

b 2 False bar

c 3 True bar

d NaN False bar

When inserting a Series that does not have the same index as the DataFrame, it will be conformed to the DataFrame’s index:

In [64]: df['one_trunc'] = df['one'][:2]

In [65]: df

Out[65]:

one flag foo one_trunc

a 1 False bar 1

b 2 False bar 2

c 3 True bar NaN

d NaN False bar NaN

You can insert raw ndarrays but their length must match the length of the DataFrame’s index.
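A brief sketch of inserting a raw ndarray. Unlike a Series, the array carries no labels, so its values are placed by position. The column names are illustrative.

```python
import numpy as np
import pandas as pd

frame = pd.DataFrame({'one': [1.0, 2.0, 3.0]}, index=['a', 'b', 'c'])

# Same length as the index: values are assigned by position
frame['raw'] = np.array([10.0, 20.0, 30.0])
print(frame)

# Wrong length: the assignment is rejected
try:
    frame['bad'] = np.array([1.0, 2.0])
    length_error = None
except ValueError as exc:
    length_error = exc
print(type(length_error).__name__)
```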

By default, columns get inserted at the end. The insert function is available to insert at a particular location in the columns:

In [66]: df.insert(1, 'bar', df['one'])

In [67]: df

Out[67]:

one bar flag foo one_trunc

a 1 1 False bar 1

b 2 2 False bar 2

c 3 3 True bar NaN

d NaN NaN False bar NaN

Assigning New Columns in Method Chains

New in version 0.16.0.

Inspired by dplyr’s mutate verb, DataFrame has an assign() method that allows you to easily create new columns that are potentially derived from existing columns.

In [68]: iris = read_csv('data/iris.data')

In [69]: iris.head()

Out[69]:

SepalLength SepalWidth PetalLength PetalWidth Name

0 5.1 3.5 1.4 0.2 Iris-setosa

1 4.9 3.0 1.4 0.2 Iris-setosa

2 4.7 3.2 1.3 0.2 Iris-setosa

3 4.6 3.1 1.5 0.2 Iris-setosa

4 5.0 3.6 1.4 0.2 Iris-setosa

In [70]: (iris.assign(sepal_ratio = iris['SepalWidth'] / iris['SepalLength'])

....: .head())

....:

Out[70]:

SepalLength SepalWidth PetalLength PetalWidth Name sepal_ratio

0 5.1 3.5 1.4 0.2 Iris-setosa 0.686

1 4.9 3.0 1.4 0.2 Iris-setosa 0.612

2 4.7 3.2 1.3 0.2 Iris-setosa 0.681

3 4.6 3.1 1.5 0.2 Iris-setosa 0.674

4 5.0 3.6 1.4 0.2 Iris-setosa 0.720

Above was an example of inserting a precomputed value. We can also pass in a function of one argument to be evaluated on the DataFrame being assigned to.

In [71]: iris.assign(sepal_ratio = lambda x: (x['SepalWidth'] /

....: x['SepalLength'])).head()

....:

Out[71]:

SepalLength SepalWidth PetalLength PetalWidth Name sepal_ratio

0 5.1 3.5 1.4 0.2 Iris-setosa 0.686

1 4.9 3.0 1.4 0.2 Iris-setosa 0.612

2 4.7 3.2 1.3 0.2 Iris-setosa 0.681

3 4.6 3.1 1.5 0.2 Iris-setosa 0.674

4 5.0 3.6 1.4 0.2 Iris-setosa 0.720

assign always returns a copy of the data, leaving the original DataFrame untouched.

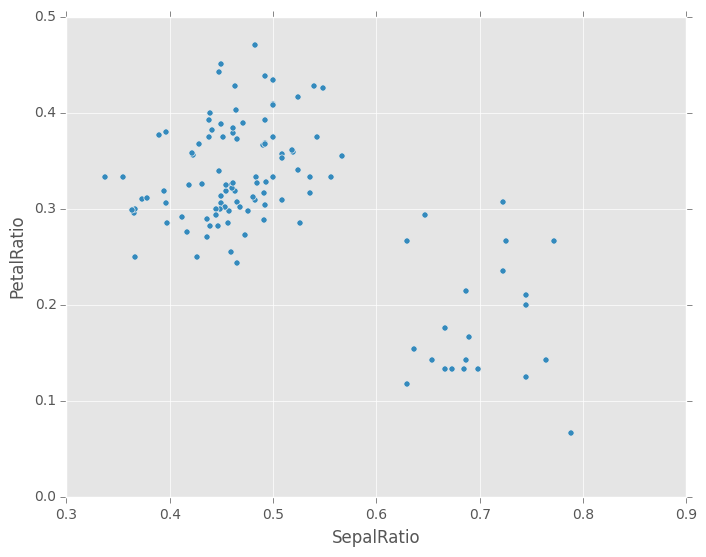

Passing a callable, as opposed to an actual value to be inserted, is useful when you don’t have a reference to the DataFrame at hand. This is common when using assign in chains of operations. For example, we can limit the DataFrame to just those observations with a Sepal Length greater than 5, calculate the ratio, and plot:

In [72]: (iris.query('SepalLength > 5')

....: .assign(SepalRatio = lambda x: x.SepalWidth / x.SepalLength,

....: PetalRatio = lambda x: x.PetalWidth / x.PetalLength)

....: .plot(kind='scatter', x='SepalRatio', y='PetalRatio'))

....:

Out[72]: <matplotlib.axes._subplots.AxesSubplot at 0xa63e2c4c>

Since a function is passed in, the function is computed on the DataFrame being assigned to. Importantly, this is the DataFrame that’s been filtered to those rows with sepal length greater than 5. The filtering happens first, and then the ratio calculations. This is an example where we didn’t have a reference to the filtered DataFrame available.

The function signature for assign is simply **kwargs. The keys are the column names for the new fields, and the values are either a value to be inserted (for example, a Series or NumPy array), or a function of one argument to be called on the DataFrame. A copy of the original DataFrame is returned, with the new values inserted.
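The iris examples depend on a local data file, so here is a self-contained sketch of the same two calling styles on a made-up frame. It also checks that the original frame is left untouched.

```python
import pandas as pd

frame = pd.DataFrame({'width': [2.0, 3.0], 'length': [4.0, 6.0]})

# Precomputed value: a Series built from the original frame
with_value = frame.assign(ratio=frame['width'] / frame['length'])

# Callable: receives the DataFrame that assign is called on
with_callable = frame.assign(ratio=lambda x: x['width'] / x['length'])

# assign returned copies; the original has no 'ratio' column
print('ratio' in frame.columns)
print(with_callable)
```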

Warning

Since the function signature of assign is **kwargs, a dictionary, the order of the new columns in the resulting DataFrame cannot be guaranteed to match the order you pass in. To make things predictable, items are inserted alphabetically (by key) at the end of the DataFrame.

All expressions are computed first, and then assigned. So you can’t refer to another column being assigned in the same call to assign. For example:

# Don't do this, bad reference to `C`

>>> df.assign(C = lambda x: x['A'] + x['B'],

...           D = lambda x: x['A'] + x['C'])

# Instead, break it into two assigns

>>> (df.assign(C = lambda x: x['A'] + x['B'])

...    .assign(D = lambda x: x['A'] + x['C']))
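The two-step form can be tried on a small frame. This is a sketch with made-up columns `A` and `B`; it works because the second assign is called on a frame that already contains `C`.

```python
import pandas as pd

frame = pd.DataFrame({'A': [1, 2, 3], 'B': [10, 20, 30]})

# The first assign creates C; the second lambda then receives
# a frame that already contains C
result = (frame.assign(C=lambda x: x['A'] + x['B'])
               .assign(D=lambda x: x['A'] + x['C']))
print(result)
```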

Indexing / Selection

The basics of indexing are as follows:

| Operation | Syntax | Result |

|---|---|---|

| Select column | df[col] | Series |

| Select row by label | df.loc[label] | Series |

| Select row by integer location | df.iloc[loc] | Series |

| Slice rows | df[5:10] | DataFrame |

| Select rows by boolean vector | df[bool_vec] | DataFrame |

Row selection, for example, returns a Series whose index is the columns of the DataFrame:

In [74]: df.loc['b']

Out[74]:

one 2

bar 2

flag False

foo bar

one_trunc 2

Name: b, dtype: object

In [75]: df.iloc[2]

Out[75]:

one 3

bar 3

flag True

foo bar

one_trunc NaN

Name: c, dtype: object

For a more exhaustive treatment of more sophisticated label-based indexing and slicing, see the section on indexing. We will address the fundamentals of reindexing / conforming to new sets of labels in the section on reindexing.
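All five rows of the table above can be exercised in one short sketch. The frame and its labels are made up for illustration.

```python
import pandas as pd

frame = pd.DataFrame({'one': [1.0, 2.0, 3.0, 4.0],
                      'two': [4.0, 3.0, 2.0, 1.0]},
                     index=['a', 'b', 'c', 'd'])

col = frame['one']                  # select column -> Series
by_label = frame.loc['b']           # select row by label -> Series
by_position = frame.iloc[2]         # select row by integer location -> Series
row_slice = frame[1:3]              # slice rows -> DataFrame
filtered = frame[frame['one'] > 2]  # boolean vector -> DataFrame

print(type(col).__name__, type(row_slice).__name__)
print(filtered)
```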

Data alignment and arithmetic

Operations between DataFrame objects automatically align on both the columns and the index (row labels). Again, the resulting object will have the union of the column and row labels.

In [76]: df = DataFrame(randn(10, 4), columns=['A', 'B', 'C', 'D'])

In [77]: df2 = DataFrame(randn(7, 3), columns=['A', 'B', 'C'])

In [78]: df + df2

Out[78]:

A B C D

0 -1.916 -0.986 -2.421e+00 NaN

1 0.965 1.677 3.298e-01 NaN

2 -1.662 2.197 -1.917e+00 NaN

3 -0.189 0.765 -9.522e-04 NaN

4 -1.076 0.397 -1.177e+00 NaN

5 2.810 -0.179 -5.705e-01 NaN

6 -1.227 0.196 5.312e-01 NaN

7 NaN NaN NaN NaN

8 NaN NaN NaN NaN

9 NaN NaN NaN NaN

When doing an operation between DataFrame and Series, the default behavior is to align the Series index on the DataFrame columns, thus broadcasting row-wise. For example:

In [79]: df - df.iloc[0]

Out[79]:

A B C D

0 0.000 0.000 0.000 0.000

1 2.386 1.358 1.223 -2.107

2 2.105 1.700 1.327 -0.689

3 1.874 2.718 2.382 -0.760

4 2.199 0.966 0.826 0.093

5 4.997 1.197 1.330 -0.285

6 1.263 0.578 1.071 -0.525

7 3.463 0.632 1.063 -0.443

8 2.680 3.163 1.298 -1.818

9 1.304 0.196 3.590 -0.867

In the special case of working with time series data, where the DataFrame index also contains dates, the broadcasting will be column-wise:

In [80]: index = date_range('1/1/2000', periods=8)

In [81]: df = DataFrame(randn(8, 3), index=index, columns=list('ABC'))

In [82]: df

Out[82]:

A B C

2000-01-01 0.063 -0.028 0.444

2000-01-02 -0.269 -1.578 1.850

2000-01-03 0.638 -0.557 -0.071

2000-01-04 -0.511 0.156 -1.076

2000-01-05 1.664 -0.438 -0.077

2000-01-06 0.029 0.179 1.740

2000-01-07 -0.729 -0.898 -0.314

2000-01-08 -0.048 -0.876 0.169

In [83]: type(df['A'])

Out[83]: pandas.core.series.Series

In [84]: df - df['A']

Out[84]:

A B C

2000-01-01 0 -0.091 0.381

2000-01-02 0 -1.309 2.119

2000-01-03 0 -1.195 -0.709

2000-01-04 0 0.668 -0.564

2000-01-05 0 -2.101 -1.741

2000-01-06 0 0.150 1.711

2000-01-07 0 -0.169 0.415

2000-01-08 0 -0.828 0.217

Warning

df - df['A']

is now deprecated and will be removed in a future release. The preferred way to replicate this behavior is

df.sub(df['A'], axis=0)

For explicit control over the matching and broadcasting behavior, see the section on flexible binary operations.
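As a sketch of the preferred form, the example below subtracts column `A` from every column of a small date-indexed frame. The data is a fixed range rather than random numbers so the result is easy to read.

```python
import numpy as np
import pandas as pd

index = pd.date_range('1/1/2000', periods=3)
frame = pd.DataFrame(np.arange(9.0).reshape(3, 3),
                     index=index, columns=list('ABC'))

# axis=0 matches the Series index against the row labels, so the
# values of column A are subtracted from every column
centered = frame.sub(frame['A'], axis=0)
print(centered)
```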

Operations with scalars are just as you would expect:

In [85]: df * 5 + 2

Out[85]:

A B C

2000-01-01 2.314 1.858 4.218

2000-01-02 0.656 -5.888 11.251

2000-01-03 5.190 -0.783 1.644

2000-01-04 -0.557 2.781 -3.378

2000-01-05 10.318 -0.189 1.613

2000-01-06 2.146 2.895 10.700

2000-01-07 -1.645 -2.490 0.429

2000-01-08 1.760 -2.378 2.846

In [86]: 1 / df

Out[86]:

A B C

2000-01-01 15.948 -35.193 2.255

2000-01-02 -3.721 -0.634 0.540

2000-01-03 1.567 -1.797 -14.039

2000-01-04 -1.955 6.398 -0.930

2000-01-05 0.601 -2.285 -12.936

2000-01-06 34.257 5.586 0.575

2000-01-07 -1.372 -1.114 -3.183

2000-01-08 -20.802 -1.142 5.913

In [87]: df ** 4

Out[87]:

A B C

2000-01-01 1.546e-05 6.519e-07 3.871e-02

2000-01-02 5.219e-03 6.195e+00 1.172e+01

2000-01-03 1.657e-01 9.598e-02 2.574e-05

2000-01-04 6.841e-02 5.966e-04 1.339e+00

2000-01-05 7.660e+00 3.671e-02 3.571e-05

2000-01-06 7.261e-07 1.027e-03 9.168e+00

2000-01-07 2.825e-01 6.503e-01 9.747e-03

2000-01-08 5.341e-06 5.878e-01 8.178e-04

Boolean operators work as well:

In [88]: df1 = DataFrame({'a' : [1, 0, 1], 'b' : [0, 1, 1] }, dtype=bool)

In [89]: df2 = DataFrame({'a' : [0, 1, 1], 'b' : [1, 1, 0] }, dtype=bool)

In [90]: df1 & df2

Out[90]:

a b

0 False False

1 False True

2 True False

In [91]: df1 | df2

Out[91]:

a b

0 True True

1 True True

2 True True

In [92]: df1 ^ df2

Out[92]:

a b

0 True True

1 True False

2 False True

In [93]: -df1

Out[93]:

a b

0 False True

1 True False

2 False False

Transposing

To transpose, access the T attribute (also the transpose function), similar to an ndarray:

# only show the first 5 rows

In [94]: df[:5].T

Out[94]:

2000-01-01 2000-01-02 2000-01-03 2000-01-04 2000-01-05

A 0.063 -0.269 0.638 -0.511 1.664

B -0.028 -1.578 -0.557 0.156 -0.438

C 0.444 1.850 -0.071 -1.076 -0.077

DataFrame interoperability with NumPy functions

Elementwise NumPy ufuncs (log, exp, sqrt, ...) and various other NumPy functions can be used with no issues on DataFrame, assuming the data within are numeric:

In [95]: np.exp(df)

Out[95]:

A B C

2000-01-01 1.065 0.972 1.558

2000-01-02 0.764 0.206 6.361

2000-01-03 1.893 0.573 0.931

2000-01-04 0.600 1.169 0.341

2000-01-05 5.278 0.646 0.926

2000-01-06 1.030 1.196 5.698

2000-01-07 0.482 0.407 0.730

2000-01-08 0.953 0.417 1.184

In [96]: np.asarray(df)

Out[96]:

array([[ 0.0627, -0.0284, 0.4436],

[-0.2688, -1.5776, 1.8502],

[ 0.6381, -0.5566, -0.0712],

[-0.5114, 0.1563, -1.0756],

[ 1.6636, -0.4377, -0.0773],

[ 0.0292, 0.179 , 1.7401],

[-0.729 , -0.898 , -0.3142],

[-0.0481, -0.8756, 0.1691]])

The dot method on DataFrame implements matrix multiplication:

In [97]: df.T.dot(df)

Out[97]:

A B C

A 4.047 -0.039 0.178

B -0.039 4.621 -2.581

C 0.178 -2.581 7.943

Similarly, the dot method on Series implements dot product:

In [98]: s1 = Series(np.arange(5,10))

In [99]: s1.dot(s1)

Out[99]: 255

DataFrame is not intended to be a drop-in replacement for ndarray as its indexing semantics are quite different in places from a matrix.

Console display

Very large DataFrames will be truncated to display them in the console. You can also get a summary using info(). (Here I am reading a CSV version of the baseball dataset from the plyr R package):

In [100]: baseball = read_csv('data/baseball.csv')

In [101]: print(baseball)

id player year stint ... hbp sh sf gidp

0 88641 womacto01 2006 2 ... 0 3 0 0

1 88643 schilcu01 2006 1 ... 0 0 0 0

.. ... ... ... ... ... .. .. .. ...

98 89533 aloumo01 2007 1 ... 2 0 3 13

99 89534 alomasa02 2007 1 ... 0 0 0 0

[100 rows x 23 columns]

In [102]: baseball.info()

<class 'pandas.core.frame.DataFrame'>

Int64Index: 100 entries, 0 to 99

Data columns (total 23 columns):

id 100 non-null int64

player 100 non-null object

year 100 non-null int64

stint 100 non-null int64

team 100 non-null object

lg 100 non-null object

g 100 non-null int64

ab 100 non-null int64

r 100 non-null int64

h 100 non-null int64

X2b 100 non-null int64

X3b 100 non-null int64

hr 100 non-null int64

rbi 100 non-null float64

sb 100 non-null float64

cs 100 non-null float64

bb 100 non-null int64

so 100 non-null float64

ibb 100 non-null float64

hbp 100 non-null float64

sh 100 non-null float64

sf 100 non-null float64

gidp 100 non-null float64

dtypes: float64(9), int64(11), object(3)

memory usage: 17.6+ KB

However, using to_string will return a string representation of the DataFrame in tabular form, though it won’t always fit the console width:

In [103]: print(baseball.iloc[-20:, :12].to_string())

id player year stint team lg g ab r h X2b X3b

80 89474 finlest01 2007 1 COL NL 43 94 9 17 3 0

81 89480 embreal01 2007 1 OAK AL 4 0 0 0 0 0

82 89481 edmonji01 2007 1 SLN NL 117 365 39 92 15 2

83 89482 easleda01 2007 1 NYN NL 76 193 24 54 6 0

84 89489 delgaca01 2007 1 NYN NL 139 538 71 139 30 0

85 89493 cormirh01 2007 1 CIN NL 6 0 0 0 0 0

86 89494 coninje01 2007 2 NYN NL 21 41 2 8 2 0

87 89495 coninje01 2007 1 CIN NL 80 215 23 57 11 1

88 89497 clemero02 2007 1 NYA AL 2 2 0 1 0 0

89 89498 claytro01 2007 2 BOS AL 8 6 1 0 0 0

90 89499 claytro01 2007 1 TOR AL 69 189 23 48 14 0

91 89501 cirilje01 2007 2 ARI NL 28 40 6 8 4 0

92 89502 cirilje01 2007 1 MIN AL 50 153 18 40 9 2

93 89521 bondsba01 2007 1 SFN NL 126 340 75 94 14 0

94 89523 biggicr01 2007 1 HOU NL 141 517 68 130 31 3

95 89525 benitar01 2007 2 FLO NL 34 0 0 0 0 0

96 89526 benitar01 2007 1 SFN NL 19 0 0 0 0 0

97 89530 ausmubr01 2007 1 HOU NL 117 349 38 82 16 3

98 89533 aloumo01 2007 1 NYN NL 87 328 51 112 19 1

99 89534 alomasa02 2007 1 NYN NL 8 22 1 3 1 0

New since 0.10.0, wide DataFrames will now be printed across multiple rows by default:

In [104]: DataFrame(randn(3, 12))

Out[104]:

0 1 2 3 4 5 6 \

0 1.225021 -0.528620 0.448676 0.619107 -1.199110 -0.949097 2.169523

1 -1.753617 0.992384 -0.505601 -0.599848 0.133585 0.008836 -1.767710

2 -0.461585 -1.321106 1.745476 1.445100 0.991037 -0.860733 -0.870661

7 8 9 10 11

0 0.302230 0.919516 0.657436 0.262574 -0.804798

1 0.700112 -0.020773 -0.302481 0.347869 0.179123

2 -0.117845 -0.046266 2.095649 -0.524324 -0.610555

You can change how much to print on a single row by setting the display.width option:

In [105]: set_option('display.width', 40) # default is 80

In [106]: DataFrame(randn(3, 12))

Out[106]:

0 1 2 \

0 -1.280951 1.472585 -1.001914

1 0.130529 -1.603771 -0.128830

2 -1.084566 -0.515272 1.367586

3 4 5 \

0 1.044770 -0.050668 -0.013289

1 -1.869301 -0.232977 -0.139801

2 0.963500 0.224105 -0.020051

6 7 8 \

0 -0.291893 2.029038 -1.117195

1 -1.083341 -0.357234 -0.818199

2 0.524663 0.351081 -1.574209

9 10 11

0 1.598577 -0.397325 0.151653

1 -0.886885 1.238885 -1.639274

2 -0.486856 -0.545888 -0.927076

You can also disable this feature via the expand_frame_repr option. This will print the table in one block.
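A minimal sketch of turning the wrapping off for a single block of code. It uses `option_context` so that the previous settings are restored afterwards, and it also lifts the column limit, which is an extra assumption made here so that all twelve columns are shown.

```python
import numpy as np
import pandas as pd

wide = pd.DataFrame(np.arange(36.0).reshape(3, 12))

# option_context restores the previous settings when the block exits
with pd.option_context('display.expand_frame_repr', False,
                       'display.max_columns', None):
    one_block = repr(wide)

# The header and the three rows are printed as one unwrapped table
print(one_block)
```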

DataFrame column attribute access and IPython completion

If a DataFrame column label is a valid Python variable name, the column can be accessed like an attribute:

In [107]: df = DataFrame({'foo1' : np.random.randn(5),

.....: 'foo2' : np.random.randn(5)})

.....:

In [108]: df

Out[108]:

foo1 foo2

0 0.909160 1.360298

1 -0.667763 -1.603624

2 -0.101656 -1.648929

3 1.189682 0.145121

4 -0.090648 -2.536359

In [109]: df.foo1

Out[109]:

0 0.909160

1 -0.667763

2 -0.101656

3 1.189682

4 -0.090648

Name: foo1, dtype: float64

The columns are also connected to the IPython completion mechanism so they can be tab-completed:

In [5]: df.fo<TAB>

df.foo1 df.foo2

Panel

Panel is a somewhat less-used, but still important container for 3-dimensional data. The term panel data is derived from econometrics and is partially responsible for the name pandas: pan(el)-da(ta)-s. The names for the 3 axes are intended to give some semantic meaning to describing operations involving panel data and, in particular, econometric analysis of panel data. However, for the strict purposes of slicing and dicing a collection of DataFrame objects, you may find the axis names slightly arbitrary:

- items: axis 0, each item corresponds to a DataFrame contained inside

- major_axis: axis 1, it is the index (rows) of each of the DataFrames

- minor_axis: axis 2, it is the columns of each of the DataFrames

Construction of Panels works about like you would expect:

From 3D ndarray with optional axis labels

In [110]: wp = Panel(randn(2, 5, 4), items=['Item1', 'Item2'],

.....: major_axis=date_range('1/1/2000', periods=5),

.....: minor_axis=['A', 'B', 'C', 'D'])

.....:

In [111]: wp

Out[111]:

<class 'pandas.core.panel.Panel'>

Dimensions: 2 (items) x 5 (major_axis) x 4 (minor_axis)

Items axis: Item1 to Item2

Major_axis axis: 2000-01-01 00:00:00 to 2000-01-05 00:00:00

Minor_axis axis: A to D

From dict of DataFrame objects

In [112]: data = {'Item1' : DataFrame(randn(4, 3)),

.....: 'Item2' : DataFrame(randn(4, 2))}

.....:

In [113]: Panel(data)

Out[113]:

<class 'pandas.core.panel.Panel'>

Dimensions: 2 (items) x 4 (major_axis) x 3 (minor_axis)

Items axis: Item1 to Item2

Major_axis axis: 0 to 3

Minor_axis axis: 0 to 2

Note that the values in the dict need only be convertible to DataFrame. Thus, they can be any of the other valid inputs to DataFrame as per above.

One helpful factory method is Panel.from_dict, which takes a dictionary of DataFrames as above, and the following named parameters:

| Parameter | Default | Description |

|---|---|---|

| intersect | False | drops elements whose indices do not align |

| orient | items | use minor to use DataFrames’ columns as panel items |

For example, compare to the construction above:

In [114]: Panel.from_dict(data, orient='minor')

Out[114]:

<class 'pandas.core.panel.Panel'>

Dimensions: 3 (items) x 4 (major_axis) x 2 (minor_axis)

Items axis: 0 to 2

Major_axis axis: 0 to 3

Minor_axis axis: Item1 to Item2

Orient is especially useful for mixed-type DataFrames. If you pass a dict of DataFrame objects with mixed-type columns, all of the data will get upcast to dtype=object unless you pass orient='minor':

In [115]: df = DataFrame({'a': ['foo', 'bar', 'baz'],

.....: 'b': np.random.randn(3)})

.....:

In [116]: df

Out[116]:

a b

0 foo -1.264356

1 bar -0.497629

2 baz 1.789719

In [117]: data = {'item1': df, 'item2': df}

In [118]: panel = Panel.from_dict(data, orient='minor')

In [119]: panel['a']

Out[119]:

item1 item2

0 foo foo

1 bar bar

2 baz baz

In [120]: panel['b']

Out[120]:

item1 item2

0 -1.264356 -1.264356

1 -0.497629 -0.497629

2 1.789719 1.789719

In [121]: panel['b'].dtypes

Out[121]:

item1 float64

item2 float64

dtype: object

Note

Unfortunately Panel, being less commonly used than Series and DataFrame, has been slightly neglected feature-wise. A number of methods and options available in DataFrame are not available in Panel. This will get worked on, of course, in future releases. And faster if you join me in working on the codebase.

From DataFrame using to_panel method

This method was introduced in v0.7 to replace LongPanel.to_long, and converts a DataFrame with a two-level index to a Panel.

In [122]: midx = MultiIndex(levels=[['one', 'two'], ['x','y']], labels=[[1,1,0,0],[1,0,1,0]])

In [123]: df = DataFrame({'A' : [1, 2, 3, 4], 'B': [5, 6, 7, 8]}, index=midx)

In [124]: df.to_panel()

Out[124]:

<class 'pandas.core.panel.Panel'>

Dimensions: 2 (items) x 2 (major_axis) x 2 (minor_axis)

Items axis: A to B

Major_axis axis: one to two

Minor_axis axis: x to y
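To make the reshaping concrete, here is a rough sketch of the same layout change using only a DataFrame and a NumPy array. It is not how to_panel is implemented; the names wide and cube are hypothetical, and the index is rebuilt with MultiIndex.from_arrays so that it holds the same rows as the example above.

```python
import numpy as np
import pandas as pd

# Same four rows as the example above: (two, y), (two, x), (one, y), (one, x).
midx = pd.MultiIndex.from_arrays([['two', 'two', 'one', 'one'],
                                  ['y', 'x', 'y', 'x']])
df = pd.DataFrame({'A': [1, 2, 3, 4], 'B': [5, 6, 7, 8]}, index=midx)

# Move the inner index level into the columns, then sort both axes so the
# layout is deterministic: rows (one, two), columns (A,x), (A,y), (B,x), (B,y).
wide = df.unstack().sort_index().sort_index(axis=1)

n_major = len(wide.index)
n_items = len(df.columns)
n_minor = wide.shape[1] // n_items

# (major, items * minor) -> (major, items, minor) -> (items, major, minor)
cube = wide.values.reshape(n_major, n_items, n_minor).transpose(1, 0, 2)

print(cube.shape)  # (2, 2, 2)
print(cube[0])     # item 'A': rows one/two, columns x/y
```

The outer index level becomes the major axis, the inner level becomes the minor axis, and each column becomes one item.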

Item selection / addition / deletion¶

Just as a DataFrame functions like a dict of Series, a Panel is like a dict of DataFrames:

In [125]: wp['Item1']

Out[125]:

A B C D

2000-01-01 0.835993 -0.621868 -0.173710 -0.174326

2000-01-02 -0.354356 2.090183 -0.736019 -1.250412

2000-01-03 -0.581326 -0.244477 0.917119 0.611695

2000-01-04 -1.576078 -0.528562 -0.704643 -0.481453

2000-01-05 1.085093 -1.229749 2.295679 -1.016910

In [126]: wp['Item3'] = wp['Item1'] / wp['Item2']

The API for insertion and deletion is the same as for DataFrame. As with DataFrame, if the item is a valid Python identifier, you can access it as an attribute and tab-complete it in IPython.
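The dict analogy can be sketched with an ordinary Python dict of DataFrames. This is only an illustration of the insertion and deletion semantics, not a Panel; the variable items and its contents are made up.

```python
import numpy as np
import pandas as pd

index = pd.date_range('2000-01-01', periods=3)
columns = ['A', 'B']

# Hypothetical stand-in for a Panel: item names mapped to DataFrames.
items = {
    'Item1': pd.DataFrame(np.arange(1.0, 7.0).reshape(3, 2),
                          index=index, columns=columns),
    'Item2': pd.DataFrame(np.full((3, 2), 2.0),
                          index=index, columns=columns),
}

# Insertion: derive a new item from existing ones (label-aligned division).
items['Item3'] = items['Item1'] / items['Item2']

# Deletion uses the same dict-style syntax.
del items['Item2']

print(sorted(items))
print(items['Item3'])
```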

Transposing¶

A Panel can be rearranged using its transpose method (which does not make a copy by default unless the data are heterogeneous):

In [127]: wp.transpose(2, 0, 1)

Out[127]:

<class 'pandas.core.panel.Panel'>

Dimensions: 4 (items) x 3 (major_axis) x 5 (minor_axis)

Items axis: A to D

Major_axis axis: Item1 to Item3

Minor_axis axis: 2000-01-01 00:00:00 to 2000-01-05 00:00:00
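The axis permutation and the no-copy behaviour for homogeneous data can be checked on a plain NumPy array of the same shape. This sketch assumes the array stands in for the underlying values of wp; it does not use Panel itself.

```python
import numpy as np

# Stand-in for the panel's values: 3 items x 5 major x 4 minor.
values = np.arange(60, dtype=float).reshape(3, 5, 4)

# Same permutation as wp.transpose(2, 0, 1): the old minor axis comes first.
transposed = values.transpose(2, 0, 1)

print(transposed.shape)                       # (4, 3, 5)
print(np.shares_memory(values, transposed))   # True: a view, not a copy
```

Because the result is a view, writing to it also changes the original array, which is worth keeping in mind when no copy is made.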

Indexing / Selection¶

| Operation | Syntax | Result |
|---|---|---|
| Select item | wp[item] | DataFrame |
| Get slice at major_axis label | wp.major_xs(val) | DataFrame |
| Get slice at minor_axis label | wp.minor_xs(val) | DataFrame |

For example, using the earlier example data, we could do:

In [128]: wp['Item1']

Out[128]:

A B C D

2000-01-01 0.835993 -0.621868 -0.173710 -0.174326

2000-01-02 -0.354356 2.090183 -0.736019 -1.250412

2000-01-03 -0.581326 -0.244477 0.917119 0.611695

2000-01-04 -1.576078 -0.528562 -0.704643 -0.481453

2000-01-05 1.085093 -1.229749 2.295679 -1.016910

In [129]: wp.major_xs(wp.major_axis[2])

Out[129]:

Item1 Item2 Item3

A -0.581326 -1.271582 0.457167

B -0.244477 -0.861256 0.283861

C 0.917119 -0.597879 -1.533955

D 0.611695 -0.118700 -5.153265

In [130]: wp.minor_axis

Out[130]: Index([u'A', u'B', u'C', u'D'], dtype='object')

In [131]: wp.minor_xs('C')

Out[131]:

Item1 Item2 Item3

2000-01-01 -0.173710 2.381645 -0.072937

2000-01-02 -0.736019 -2.413161 0.305002

2000-01-03 0.917119 -0.597879 -1.533955

2000-01-04 -0.704643 -1.536019 0.458746

2000-01-05 2.295679 0.181524 12.646732
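The orientation of the two cross-section results can be reproduced from a 3-D array. The helper functions below are hypothetical and merely share their names with the Panel methods; the sketch assumes the values are laid out as items x major x minor.

```python
import numpy as np
import pandas as pd

items = ['Item1', 'Item2']
major = pd.date_range('2000-01-01', periods=3)
minor = ['A', 'B', 'C', 'D']
values = np.arange(24, dtype=float).reshape(2, 3, 4)  # items x major x minor

def major_xs(label):
    """Slice at one major_axis label: rows are minor labels, columns are items."""
    i = major.get_loc(label)
    return pd.DataFrame(values[:, i, :].T, index=minor, columns=items)

def minor_xs(label):
    """Slice at one minor_axis label: rows are major labels, columns are items."""
    j = minor.index(label)
    return pd.DataFrame(values[:, :, j].T, index=major, columns=items)

print(major_xs(major[2]))
print(minor_xs('C'))
```

In both cases the items end up as the columns of the result, which matches the outputs shown above.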

Squeezing¶

Another way to change the dimensionality of an object is to squeeze an object that has length 1 along some axis. The result is similar to wp['Item1']:

In [132]: wp.reindex(items=['Item1']).squeeze()

Out[132]:

A B C D

2000-01-01 0.835993 -0.621868 -0.173710 -0.174326

2000-01-02 -0.354356 2.090183 -0.736019 -1.250412

2000-01-03 -0.581326 -0.244477 0.917119 0.611695

2000-01-04 -1.576078 -0.528562 -0.704643 -0.481453

2000-01-05 1.085093 -1.229749 2.295679 -1.016910

In [133]: wp.reindex(items=['Item1'],minor=['B']).squeeze()

Out[133]:

2000-01-01 -0.621868

2000-01-02 2.090183

2000-01-03 -0.244477

2000-01-04 -0.528562

2000-01-05 -1.229749

Freq: D, Name: B, dtype: float64
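The same idea applies one dimension down. As a small sketch on made-up data, DataFrame.squeeze drops every axis of length 1, so a one-column frame becomes a Series and a one-cell frame becomes a scalar:

```python
import numpy as np
import pandas as pd

df = pd.DataFrame(np.arange(10.0).reshape(5, 2),
                  index=pd.date_range('2000-01-01', periods=5),
                  columns=['A', 'B'])

one_column = df[['B']]          # still 2-D, shape (5, 1)
series = one_column.squeeze()   # the length-1 axis is dropped

one_cell = df.iloc[[0], [1]]    # 2-D, shape (1, 1)
scalar = one_cell.squeeze()     # both axes have length 1

print(type(series).__name__, series.name)
print(scalar)
```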

Conversion to DataFrame¶

A Panel can be represented in 2D form as a hierarchically indexed DataFrame. See the section on hierarchical indexing for more on this. To convert a Panel to a DataFrame, use the to_frame method:

In [134]: panel = Panel(np.random.randn(3, 5, 4), items=['one', 'two', 'three'],

.....: major_axis=date_range('1/1/2000', periods=5),

.....: minor_axis=['a', 'b', 'c', 'd'])

.....:

In [135]: panel.to_frame()

Out[135]:

one two three

major minor

2000-01-01 a 0.445900 -1.286198 -1.023189

b -0.574496 -0.407154 0.591682

c 0.872979 0.068084 -0.008919

d 0.297255 -2.157051 -0.415572

2000-01-02 a -1.022617 -0.443982 -0.772683

b 1.091870 -0.881639 -0.516197

c 1.831444 0.851834 0.626655

d 1.271808 -1.352515 0.269623

2000-01-03 a -0.472876 0.228761 1.709250

b -0.279340 0.416858 -0.830728

c 0.495966 0.301709 -0.290244

d 0.367858 0.569010 -1.588782

2000-01-04 a -1.530917 -0.047619 0.639406

b -0.285890 0.413370 1.055533

c 0.943062 0.573056 -0.260898

d 1.361752 -0.154419 -0.289725

2000-01-05 a 0.210373 0.987044 0.279621

b -1.945608 0.063191 0.454423

c 2.532409 0.439086 -0.065750

d 0.373819 1.657475 1.465709
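The 2D layout shown above can be rebuilt by hand from a 3-D array, which may help when reasoning about how the two forms correspond. This is a sketch under the assumption that the values are ordered items x major x minor; it is not the to_frame implementation, and the names flat and frame are illustrative.

```python
import numpy as np
import pandas as pd

items = ['one', 'two', 'three']
major = pd.date_range('1/1/2000', periods=5)
minor = ['a', 'b', 'c', 'd']
values = np.arange(60, dtype=float).reshape(3, 5, 4)  # items x major x minor

# One row per (major, minor) pair, with major varying slowest.
index = pd.MultiIndex.from_product([major, minor], names=['major', 'minor'])

# (items, major, minor) -> (major, minor, items) -> (major * minor, items)
flat = values.transpose(1, 2, 0).reshape(len(major) * len(minor), len(items))
frame = pd.DataFrame(flat, index=index, columns=items)

print(frame.shape)  # (20, 3)
print(frame.head())
```

Each item becomes a column, and each (major, minor) pair becomes one row of the hierarchical index.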

Panel4D (Experimental)¶

Panel4D is a 4-dimensional named container very much like a Panel, but with 4 named dimensions. It is intended as a test bed for more N-dimensional named containers.

- labels: axis 0, each item corresponds to a Panel contained inside

- items: axis 1, each item corresponds to a DataFrame contained inside

- major_axis: axis 2, it is the index (rows) of each of the DataFrames

- minor_axis: axis 3, it is the columns of each of the DataFrames

Panel4D is a sub-class of Panel, so most methods that work on Panels are applicable to Panel4D. The following methods are disabled:

- join, to_frame, to_excel, to_sparse, groupby

Construction of Panel4D works in a very similar manner to that of a Panel.

From 4D ndarray with optional axis labels¶

In [136]: p4d = Panel4D(randn(2, 2, 5, 4),

.....: labels=['Label1','Label2'],

.....: items=['Item1', 'Item2'],

.....: major_axis=date_range('1/1/2000', periods=5),

.....: minor_axis=['A', 'B', 'C', 'D'])

.....:

In [137]: p4d

Out[137]:

<class 'pandas.core.panelnd.Panel4D'>

Dimensions: 2 (labels) x 2 (items) x 5 (major_axis) x 4 (minor_axis)

Labels axis: Label1 to Label2

Items axis: Item1 to Item2

Major_axis axis: 2000-01-01 00:00:00 to 2000-01-05 00:00:00

Minor_axis axis: A to D

From dict of Panel objects¶

In [138]: data = { 'Label1' : Panel({ 'Item1' : DataFrame(randn(4, 3)) }),

.....: 'Label2' : Panel({ 'Item2' : DataFrame(randn(4, 2)) }) }

.....:

In [139]: Panel4D(data)

Out[139]:

<class 'pandas.core.panelnd.Panel4D'>

Dimensions: 2 (labels) x 2 (items) x 4 (major_axis) x 3 (minor_axis)

Labels axis: Label1 to Label2

Items axis: Item1 to Item2

Major_axis axis: 0 to 3

Minor_axis axis: 0 to 2

Note that the values in the dict need only be convertible to Panels. Thus, they can be any of the other valid inputs to Panel as per above.

Slicing¶

Slicing works in a similar manner to a Panel. [] slices the first dimension, while .ix allows you to slice arbitrarily and get back lower-dimensional objects:

In [140]: p4d['Label1']

Out[140]:

<class 'pandas.core.panel.Panel'>

Dimensions: 2 (items) x 5 (major_axis) x 4 (minor_axis)

Items axis: Item1 to Item2

Major_axis axis: 2000-01-01 00:00:00 to 2000-01-05 00:00:00

Minor_axis axis: A to D

4D -> Panel

In [141]: p4d.ix[:,:,:,'A']

Out[141]:

<class 'pandas.core.panel.Panel'>

Dimensions: 2 (items) x 2 (major_axis) x 5 (minor_axis)

Items axis: Label1 to Label2

Major_axis axis: Item1 to Item2

Minor_axis axis: 2000-01-01 00:00:00 to 2000-01-05 00:00:00

4D -> DataFrame

In [142]: p4d.ix[:,:,0,'A']

Out[142]:

Label1 Label2

Item1 1.127489 0.015494

Item2 -1.650400 0.130533

4D -> Series

In [143]: p4d.ix[:,0,0,'A']

Out[143]:

Label1 1.127489

Label2 0.015494

Name: A, dtype: float64
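The drop in dimensionality follows the usual indexing rule: every axis indexed with a single label or position disappears from the result. A minimal sketch with a NumPy array of the same shape as p4d (positions used in place of labels) shows the dimension counts only; the orientation of the labelled pandas results may differ from the raw array.

```python
import numpy as np

# Stand-in for p4d's values: labels x items x major x minor.
p4d_values = np.zeros((2, 2, 5, 4))

as_panel = p4d_values[:, :, :, 0]    # one axis fixed    -> 3-D
as_frame = p4d_values[:, :, 0, 0]    # two axes fixed    -> 2-D
as_series = p4d_values[:, 0, 0, 0]   # three axes fixed  -> 1-D

print(as_panel.shape)   # (2, 2, 5)
print(as_frame.shape)   # (2, 2)
print(as_series.shape)  # (2,)
```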

Transposing¶

A Panel4D can be rearranged using its transpose method (which does not make a copy by default unless the data are heterogeneous):

In [144]: p4d.transpose(3, 2, 1, 0)

Out[144]:

<class 'pandas.core.panelnd.Panel4D'>

Dimensions: 4 (labels) x 5 (items) x 2 (major_axis) x 2 (minor_axis)

Labels axis: A to D

Items axis: 2000-01-01 00:00:00 to 2000-01-05 00:00:00

Major_axis axis: Item1 to Item2

Minor_axis axis: Label1 to Label2

PanelND (Experimental)¶

PanelND is a module with a set of factory functions to enable a user to construct N-dimensional named containers like Panel4D, with a custom set of axis labels. Thus a domain-specific container can easily be created.

The following creates a Panel5D. A new panel type object must be sliceable into a lower dimensional object. Here we slice to a Panel4D.

In [145]: from pandas.core import panelnd

In [146]: Panel5D = panelnd.create_nd_panel_factory(

.....: klass_name = 'Panel5D',

.....: orders = [ 'cool', 'labels','items','major_axis','minor_axis'],

.....: slices = { 'labels' : 'labels', 'items' : 'items',

.....: 'major_axis' : 'major_axis', 'minor_axis' : 'minor_axis' },

.....: slicer = Panel4D,

.....: aliases = { 'major' : 'major_axis', 'minor' : 'minor_axis' },

.....: stat_axis = 2)

.....:

In [147]: p5d = Panel5D(dict(C1 = p4d))

In [148]: p5d

Out[148]:

<class 'pandas.core.panelnd.Panel5D'>

Dimensions: 1 (cool) x 2 (labels) x 2 (items) x 5 (major_axis) x 4 (minor_axis)

Cool axis: C1 to C1

Labels axis: Label1 to Label2

Items axis: Item1 to Item2

Major_axis axis: 2000-01-01 00:00:00 to 2000-01-05 00:00:00

Minor_axis axis: A to D

# print a slice of our 5D

In [149]: p5d.ix['C1',:,:,0:3,:]

Out[149]:

<class 'pandas.core.panelnd.Panel4D'>

Dimensions: 2 (labels) x 2 (items) x 3 (major_axis) x 4 (minor_axis)

Labels axis: Label1 to Label2

Items axis: Item1 to Item2

Major_axis axis: 2000-01-01 00:00:00 to 2000-01-03 00:00:00

Minor_axis axis: A to D

# transpose it

In [150]: p5d.transpose(1,2,3,4,0)

Out[150]:

<class 'pandas.core.panelnd.Panel5D'>

Dimensions: 2 (cool) x 2 (labels) x 5 (items) x 4 (major_axis) x 1 (minor_axis)

Cool axis: Label1 to Label2

Labels axis: Item1 to Item2

Items axis: 2000-01-01 00:00:00 to 2000-01-05 00:00:00

Major_axis axis: A to D

Minor_axis axis: C1 to C1

# look at the shape & dim

In [151]: p5d.shape

Out[151]: (1, 2, 2, 5, 4)

In [152]: p5d.ndim

Out[152]: 5